Extraypork

This could perhaps go in with the other raytracers, but the technique is different enough (and the actual raytracing bad enough) that I'll give it a separate project page.

The following description is grabbed from the readme, since I ended up spending so much time writing that:

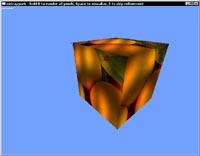

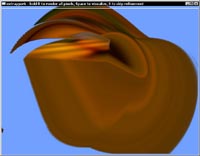

Experimental raytracing optimization using screenspace motion vectors.

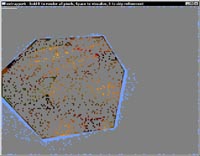

Hold SPACE to show which parts of the screen are actually rendered each frame.

The grey areas are extrapolated from the previous framebuffer using motion vectors computed through partial raycasting once per 4x4 pixel square.
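Because a motion vector is only measured once per 4x4 square, the pixels inside each square still need vectors of their own. The readme doesn't say how the demo fills them in. The sketch below assumes the coarse vectors sit on the corners of the 4x4 squares and are bilinearly interpolated in between. `upsample_motion` and the grid layout are hypothetical, for illustration only.

```python
BLOCK = 4  # one motion vector is measured per 4x4 pixel square


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def upsample_motion(coarse, width, height):
    """Expand a coarse grid of (dx, dy) motion vectors to one vector per pixel.

    Assumption (not from the demo's source): coarse[j][i] holds the vector
    measured at pixel (i*BLOCK, j*BLOCK), and width/height are multiples of
    BLOCK, so the grid has height//BLOCK + 1 rows and width//BLOCK + 1 columns.
    """
    out = []
    for y in range(height):
        j, fy = divmod(y, BLOCK)
        ty = fy / BLOCK  # vertical position inside the square
        row = []
        for x in range(width):
            i, fx = divmod(x, BLOCK)
            tx = fx / BLOCK  # horizontal position inside the square
            (ax, ay), (bx, by) = coarse[j][i], coarse[j][i + 1]
            (cx, cy), (dx, dy) = coarse[j + 1][i], coarse[j + 1][i + 1]
            # Interpolate along the top and bottom edges, then between them.
            top = (lerp(ax, bx, tx), lerp(ay, by, tx))
            bottom = (lerp(cx, dx, tx), lerp(cy, dy, tx))
            row.append((lerp(top[0], bottom[0], ty), lerp(top[1], bottom[1], ty)))
        out.append(row)
    return out
```

Under this layout a W x H image needs (W/4 + 1)(H/4 + 1) motion rays, roughly one per 16 pixels, instead of one full ray per pixel.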

Holding R will force full raytracing of every pixel on screen, for comparison.

The white line indicates the percentage of pixels (coverage) being rendered.

The motion vectors are calculated by shooting a ray and storing the 3D world position of the first point hit. This point is then projected back to the screen using the camera and scene setup from the previous frame (just a normal perspective projection, as used in rasterizers) to get a source screen point. Actual pixel extrapolation is then performed by rendering a simple displacement map: each target pixel is set to the color bilinearly sampled from the previous framebuffer at the source point associated with that screen position.
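The reprojection step can be sketched on the CPU as follows. It assumes a pinhole camera described by a position, a row-major 3x3 world-to-camera rotation and a focal length in pixels, with pixel centres at integer coordinates. `project`, `bilinear` and `extrapolate_pixel` are illustrative names, not functions from the demo's source, and single-channel images stand in for RGB.

```python
def project(point, cam_pos, cam_rot, focal, cx, cy):
    """Perspective-project a world-space point to screen coordinates.

    cam_rot is a row-major 3x3 world-to-camera rotation; the camera looks
    down +z. Returns None for points on or behind the camera plane.
    """
    d = [point[k] - cam_pos[k] for k in range(3)]
    xc, yc, zc = (sum(cam_rot[r][k] * d[k] for k in range(3)) for r in range(3))
    if zc <= 1e-6:
        return None
    return (cx + focal * xc / zc, cy + focal * yc / zc)


def bilinear(img, x, y):
    """Bilinearly sample a 2D list of floats at (x, y), clamped to the image."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = x - x0, y - y0
    top = img[y0][x0] * (1 - tx) + img[y0][x1] * tx
    bottom = img[y1][x0] * (1 - tx) + img[y1][x1] * tx
    return top * (1 - ty) + bottom * ty


def extrapolate_pixel(hit_point, prev_camera, prev_frame):
    """Fetch the value a ray hit had in the previous frame.

    prev_camera = (cam_pos, cam_rot, focal, cx, cy) of the previous frame.
    Returns None when the point was behind the old camera or off-screen,
    i.e. there is nothing to extrapolate from and the pixel must be raytraced.
    """
    src = project(hit_point, *prev_camera)
    if src is None:
        return None
    sx, sy = src
    h, w = len(prev_frame), len(prev_frame[0])
    if not (0.0 <= sx <= w - 1 and 0.0 <= sy <= h - 1):
        return None
    return bilinear(prev_frame, sx, sy)
```

This sketch does not notice points that were hidden behind other geometry in the previous frame; storing depth alongside color and comparing it after reprojection would be one way to catch those.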

The demo is limited to a static scene where only the camera moves, to avoid the additional work of transforming points using independent object matrices. Adding this flexibility shouldn't have any significant performance impact though.

Rendering is focused on areas of high contrast and "border conditions", which in this case essentially means moving edges between cube and background, as well as high-contrast areas of the texture (if you look closely, edges remain sharp while large featureless areas become blurrier in extrapolated mode).
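A tile selection rule in this spirit might look like the sketch below. The criterion (luminance range within a tile), the threshold and the random refresh probability are all assumptions; the readme only says that rendering concentrates on high contrast and border conditions, and that re-rendered tiles are sprinkled at random. `select_tiles` is a hypothetical name.

```python
import random

TILE = 4  # assumed tile size, matching the 4x4 motion-vector squares


def select_tiles(luma, valid, threshold, refresh_rate, rng):
    """Pick which TILE x TILE tiles to raytrace in full this frame.

    luma:  2D list of extrapolated luminance values.
    valid: 2D list of bools, False where extrapolation found no source pixel.
    A tile is chosen if it contains a hole, if its luminance range exceeds
    `threshold` (a moving edge or high-contrast texture), or at random with
    probability `refresh_rate` so no area goes stale indefinitely.
    Returns a list of (tile_x, tile_y) indices.
    """
    h, w = len(luma), len(luma[0])
    chosen = []
    for ty in range(0, h, TILE):
        for tx in range(0, w, TILE):
            ys = range(ty, min(ty + TILE, h))
            xs = range(tx, min(tx + TILE, w))
            values = [luma[y][x] for y in ys for x in xs]
            has_hole = any(not valid[y][x] for y in ys for x in xs)
            contrast = max(values) - min(values)
            if has_hole or contrast > threshold or rng.random() < refresh_rate:
                chosen.append((tx // TILE, ty // TILE))
    return chosen
```

The fraction of tiles returned is the kind of coverage figure the white line reports, though the demo may count it differently.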

Ideally, there would be some kind of general error estimate based on the difference between the measured world position at a raycast point and the extrapolated position, and also the difference between the extrapolated color and the raytraced (sparse) color samples. Without color error estimation there will be significant noise on dynamically lit surfaces. One problem with measuring this error is that you might get confusing results from micro-variations in the surface due to textures and similar detail. It's probably feasible to add a separate raytracing path which excludes high-frequency detail and only deals with smooth, flat surfaces, so that sparsely sampled color values are more relevant to estimating variations over time.
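The proposed error estimate could be written down roughly as below. This is a sketch of the idea, not something the demo implements. It assumes the extrapolated buffer also carries a predicted world position per pixel. The weights and metrics are hypothetical: Euclidean distance for position and the largest per-channel difference for color.

```python
import math


def sample_error(hit_pos, predicted_pos, traced_rgb, extrapolated_rgb,
                 pos_weight=1.0, color_weight=1.0):
    """Combine geometric and color error at one sparse raycast sample."""
    # How far the extrapolated geometry has drifted from the true hit point.
    pos_err = math.dist(hit_pos, predicted_pos)
    # Largest per-channel difference between fresh and extrapolated color.
    color_err = max(abs(a - b) for a, b in zip(traced_rgb, extrapolated_rgb))
    return pos_weight * pos_err + color_weight * color_err


def needs_refresh(samples, threshold):
    """True if any sample in a tile exceeds the error threshold.

    samples: iterable of argument tuples for sample_error.
    """
    return any(sample_error(*s) > threshold for s in samples)
```

Position error is in world units and color error in color units, so the two weights also act as unit conversions. Taking `traced_rgb` from the smooth, texture-free path suggested above would keep texture detail from inflating the color term.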

The next problem is how to update large surfaces which only undergo a slight, widespread color shift, as would often be the case with dynamically lit objects. You wouldn't want to fully render every pixel of the surface, but rather use the coarse color information to scale the pixels already present from earlier frames and extrapolation. This might be tricky but should be possible. You could scale by the delta color, for instance, so you wouldn't need to know any details about the original high-frequency color channels; you just bump them up or down according to how the smooth surface changes in color. So if you shine a red light onto a plane, the plane will become redder regardless of its previous appearance. Like the spatial extrapolation, this will only have to maintain reasonable accuracy over a span of maybe 10 frames before it's updated by one of the re-rendered tiles which are constantly sprinkled onto the screen at random.
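One reading of "scale by the delta color" is a per-channel ratio between the new and old coarse surface color, applied to the cached detailed pixel. The sketch below assumes the old coarse color is stored alongside each pixel; `relight` and its parameters are hypothetical.

```python
def relight(pixel, old_smooth, new_smooth, eps=1e-6):
    """Scale a cached pixel by how much the smooth surface color changed.

    pixel:      RGB from earlier frames or extrapolation (keeps texture detail).
    old_smooth: coarse, texture-free surface color when pixel was rendered.
    new_smooth: the same coarse color sampled this frame.
    Each channel is multiplied by new/old, so a red light raises the red
    channel of every pixel regardless of its original color. `eps` guards
    against division by zero on black surfaces.
    """
    return tuple(p * n / max(o, eps) for p, o, n in zip(pixel, old_smooth, new_smooth))
```

An additive variant (`p + (n - o)`) is the other natural reading; the multiplicative form preserves texture contrast, while the additive one behaves better where the old coarse color is close to black.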

There is potential for speeding up the process with GPU hardware, since a big part of the extrapolation consists of bilinear lookups, which GPU texture units perform natively.

The current implementation uses two concurrent threads for rendering.

Both extrapolated and full-screen raytracing are multithreaded, to make for a fair comparison. The actual raytracing isn't very optimized at all, though, and in fact the method will be more effective with increased computational load per raytraced pixel (particularly surface computation, as opposed to geometric raycasting).
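Why surface cost matters more than geometric cost can be shown with a back-of-envelope model. The assumptions are illustrative, not measurements from the demo. A fully raytraced pixel costs `geom + surface`. Each 4x4 square pays one extra geometry-only ray for its motion vector. An extrapolated pixel costs `extrap` (a few bilinear lookups). A fraction `coverage` of pixels is still raytraced.

```python
def speedup(coverage, geom, surface, extrap, block=4):
    """Per-pixel cost of full raytracing divided by the extrapolated mode's cost."""
    full = geom + surface
    # Raytraced share + one geometry-only ray per block + cheap extrapolation.
    mixed = coverage * full + geom / (block * block) + (1.0 - coverage) * extrap
    return full / mixed
```

At 25% coverage with free extrapolation, `speedup(0.25, 1.0, 0.0, 0.0)` and `speedup(0.25, 16.0, 0.0, 0.0)` both give 3.2: making geometry more expensive also makes the motion rays more expensive, so nothing is gained. Raising `surface` instead pushes the result towards the limit of 1 / coverage = 4, because motion rays never pay for shading.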

Partial source is included for the brave and curious.

extraypork.zip (226 kB)
